Hello, first let me say this is my first real ZBrush effort, and my first post here! I am really excited to join the community, and it has been my lifelong dream to do this kind of work, so I hope that these forums will become a regular haunt for me!

The current problem I am having is with displacement maps in Maya.

Here are my specs:

MacBook Pro, 4 GB of RAM

ZBrush 3.2 for Mac

Maya 2010

Here is the process I went through so far:

1) Modeling

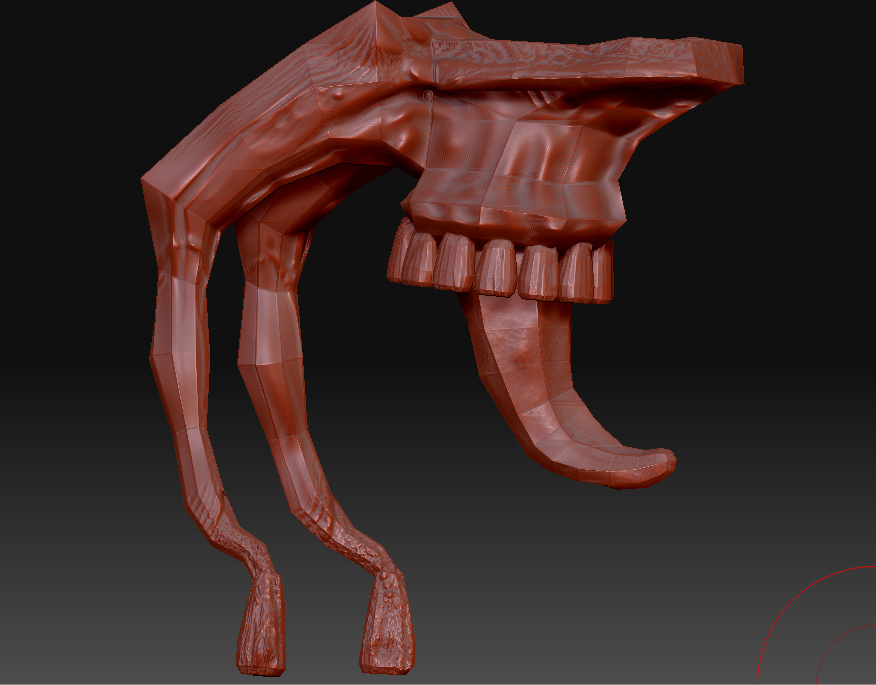

I modeled the teeth in Maya. Then I set that aside, while I modeled the rest in ZSpheres. Next I exported that mesh as an OBJ which I combined with my teeth mesh in Maya. I used the Edit Mesh > Combine option in Maya, and exported this out to OBJ again, and imported that into ZBrush.

2) Sculpting

I sculpted it, and when finished (see first attached image), I had to generate new UVs in ZBrush; I used cylindrical mapping. I then exported the lowest subdiv level as an OBJ, and generated the displacement map. The highest subdiv level only has 1,974 mil points… is this pretty good? Average?

I read up on etiquette here, and did an exhaustive search of all the Maya displacement threads that already exist, so I hope that I have done my due diligence and given everyone all the info they need to help me. One thing I noticed that looked similar to my problem was in the FAQ, “Step effect in Maya”, but that was due to an 8-bit map image, so I double-checked, and mine is in fact 16-bit.
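For anyone wondering why the bit depth matters for that step effect, here is a rough sketch of the arithmetic as I understand it. It is not taken from the FAQ: the `step_size` helper and the displacement range of 2.0 scene units are made-up values for illustration, and it assumes an integer map that spreads its gray levels evenly across that range.

```python
def step_size(displacement_range, bit_depth):
    """Smallest height difference an integer map of this bit depth can encode."""
    levels = 2 ** bit_depth  # number of distinct gray values per pixel
    return displacement_range / (levels - 1)


# Hypothetical total displacement range in scene units (an assumption,
# not a value measured from my scene).
RANGE = 2.0

for bits in (8, 16):
    print(f"{bits}-bit: {2 ** bits} levels, step = {step_size(RANGE, bits):.6f} units")
```

With only 256 levels, each step in an 8-bit map is coarse enough to show up as terracing on a smooth surface, while the 65,536 levels of a 16-bit map make each step far smaller.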

Thanks in advance for your help, and I promise you that no matter what, I will not give up until I have the workflow between ZBrush and animating in Maya down pat!!!

Edit: Didn’t know how to put the images in-line with the text when I posted it!
