Hello,

I'm a complete beginner with ZBrush. I have been modeling in 3ds Max for three years, and since I wanted to make some meshes for a game, I started learning about normal mapping.

I also want to make game modeling my profession, so I decided to investigate. That led me to ZBrush and its amazing way of raising just about everything you do to an artist's level.

But now I have this dilemma: should I get ZBrush 2 and ZMapper, or stick with displacement maps (which I have never used, though I do understand the logic behind them)?

I had a model that wore armour. In my first attempt at building the normal maps I wanted to do it quickly, so instead of modeling the extra detail I used height maps.

So I built the model, unwrapped it and made my textures in Photoshop.

I got the height-to-normal-map plugin from NVIDIA, which I had used before to change a texture in a game, BUT it had a big problem.

When doing the calculation, the plugin didn't know what was left and what was right, so on the back side it treated "right" as one direction and on the front side as the opposite one.

Making a height map for each side, "normalizing" it and then flipping one didn't work either. I tried everything, but nothing worked.

My model had a metal stripe across the chest that ran all the way around, from the middle of the front to the middle of the back.

But when you looked at it, the stripe on the front seemed to protrude, while the one on the back looked indented. And at the seam where the two halves met there was a strange error.
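For anyone hitting the same thing: a height-to-normal filter typically takes finite differences of the height map in texture space, so if one half of the mesh uses mirrored UVs, the horizontal slope (the red channel) comes out with the opposite sign on that half. That matches the stripe reading as raised on one side and sunken on the other. The sketch below only illustrates the idea and is not the NVIDIA plugin's actual code; the function name `height_to_normal` and the `flip_x` parameter are hypothetical, and the sign convention for the green channel differs between tools and engines.

```python
import math


def height_to_normal(height, strength=1.0, flip_x=False):
    """Convert a height map (list of rows, values 0..1) into a tangent-space
    normal map using central differences. Returns rows of (r, g, b) in 0..255.

    flip_x simulates a mirrored UV shell: the horizontal slope changes sign,
    which is why the same stripe can look raised on one half and sunken on
    the other. (Hypothetical helper for illustration only.)
    """
    h = len(height)
    w = len(height[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Clamp neighbour lookups at the image borders.
            left = height[y][max(x - 1, 0)]
            right = height[y][min(x + 1, w - 1)]
            up = height[max(y - 1, 0)][x]
            down = height[min(y + 1, h - 1)][x]
            dx = (right - left) * strength
            dy = (down - up) * strength  # measured in image rows (downwards)
            if flip_x:
                dx = -dx  # mirrored UVs reverse the U direction
            nx, ny, nz = -dx, -dy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            nx, ny, nz = nx / length, ny / length, nz / length
            # Remap each component from -1..1 to 0..255.
            row.append(tuple(int(round((c * 0.5 + 0.5) * 255)) for c in (nx, ny, nz)))
        out.append(row)
    return out


if __name__ == "__main__":
    ramp = [[0.0, 0.5, 1.0]]  # a simple left-to-right slope
    print(height_to_normal(ramp)[0][1])               # (37, 128, 218)
    print(height_to_normal(ramp, flip_x=True)[0][1])  # (218, 128, 218)
```

The usual fixes follow from this: either avoid mirrored UV shells when baking from a height map, or invert the red channel on the mirrored half so both sides agree.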

As far as I can see, this method with xNormal uses the same system, so I expect the same kind of errors, even more so because with a rotated UV shell you won't even have up and down fixed…

I have a model that is 500k polys in ZBrush. When I import it into Max, its normals appear flipped, and the whole model is mirrored horizontally…

Not only that, it has 1 million polys now, and it looks awful. If I want the same smoothness as in ZBrush, I have to add a MeshSmooth with 1 iteration, which results in a 4-million-poly model when it was 500k in ZBrush.

Then I placed the original mesh inside it, opened the Render To Texture dialog, set up the projection and rendered… it always crashes…

After I did it once, got errors, fixed them, saved and rendered again, every time I put a MeshSmooth on the mesh it turns plain red, as if it had 100% self-illumination (really plain, I mean), and that reddish devil eats all of my PC's RAM, so I couldn't render it anymore.

I tried enabling the MeshSmooth only at render time, but it seems Render To Texture doesn't count as rendering for this…

It was all very disappointing really, since I had seen a tutorial that showed it to be über-easy in ZBrush 2, and I expected it to be even easier in ZB3.

If you have paid attention, thank you =)

Martin

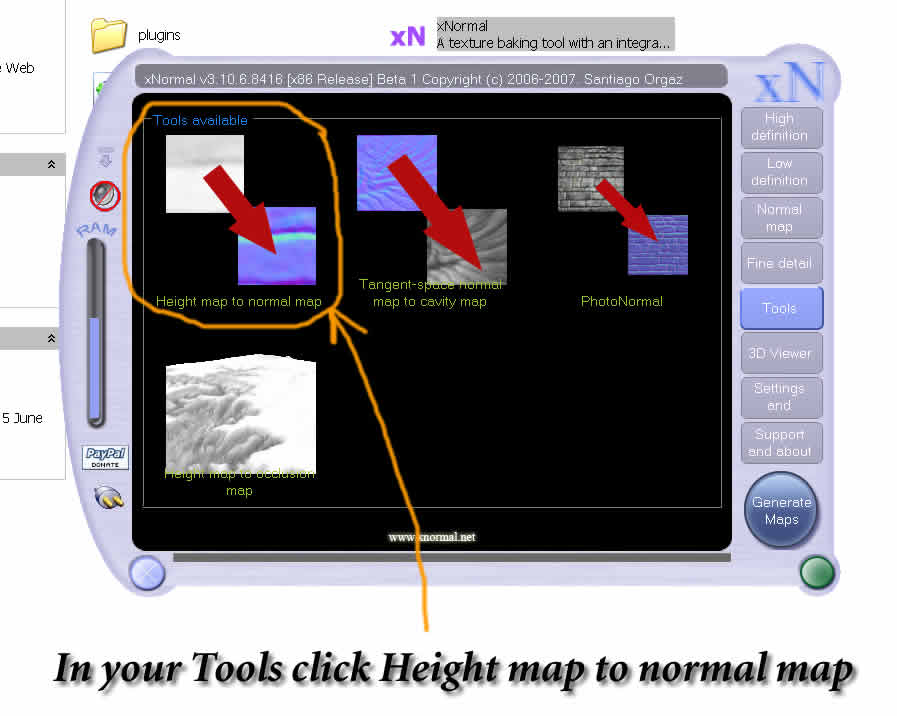

…and then use xNormal to convert it into a normal map. I reckon it would be much easier compared to bringing the models over, building cages and exporting normals the hard way.


So I've tried it in XSI, and at least got complete normal and…


Try the disp->normal PS technique.

skarpunin