Hi everyone.

I have been looking to streamline my modelling/texturing workflow to save time.

Workflow & Context

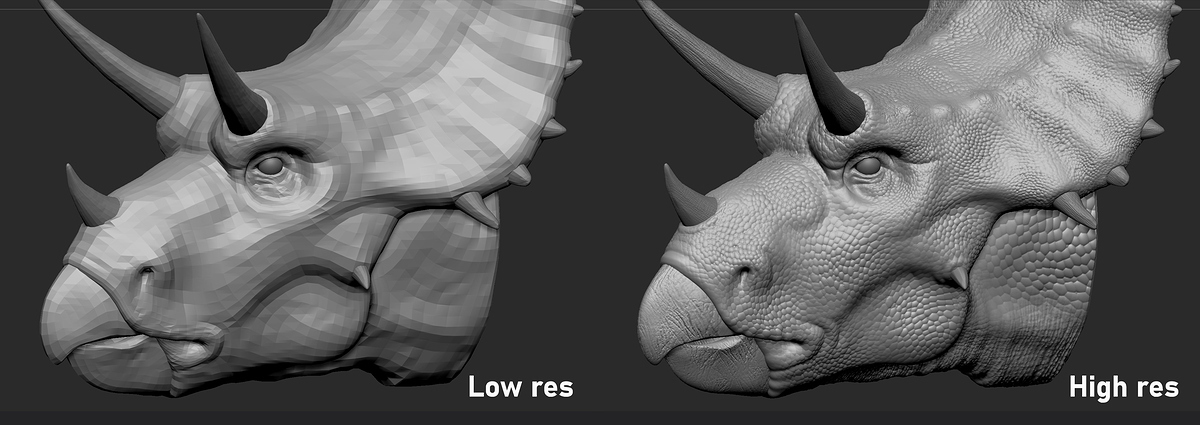

I have been considering using ZBrush to capture my high-res detail in a normal map for my low-res mesh. Currently I use Substance Painter for this, as well as for all my texturing.

These are my reasons for wanting to swap from Painter to ZBrush for normal map creation:

- I’ve heard that the projections are ‘smarter’ and less prone to errors in tight areas, such as eyelids and mouths.

- I won’t have to export my high-res mesh out of ZBrush. This will be wonderful for particularly dense meshes that like to crash Painter when baking maps, as well as other problems.

- Because exporting isn’t required, I won’t have to run Decimation Master, which saves time too.

Any thoughts on this workflow would be appreciated, and feel free to correct me if I got anything wrong.

BUT HERE’S THE PROBLEM:

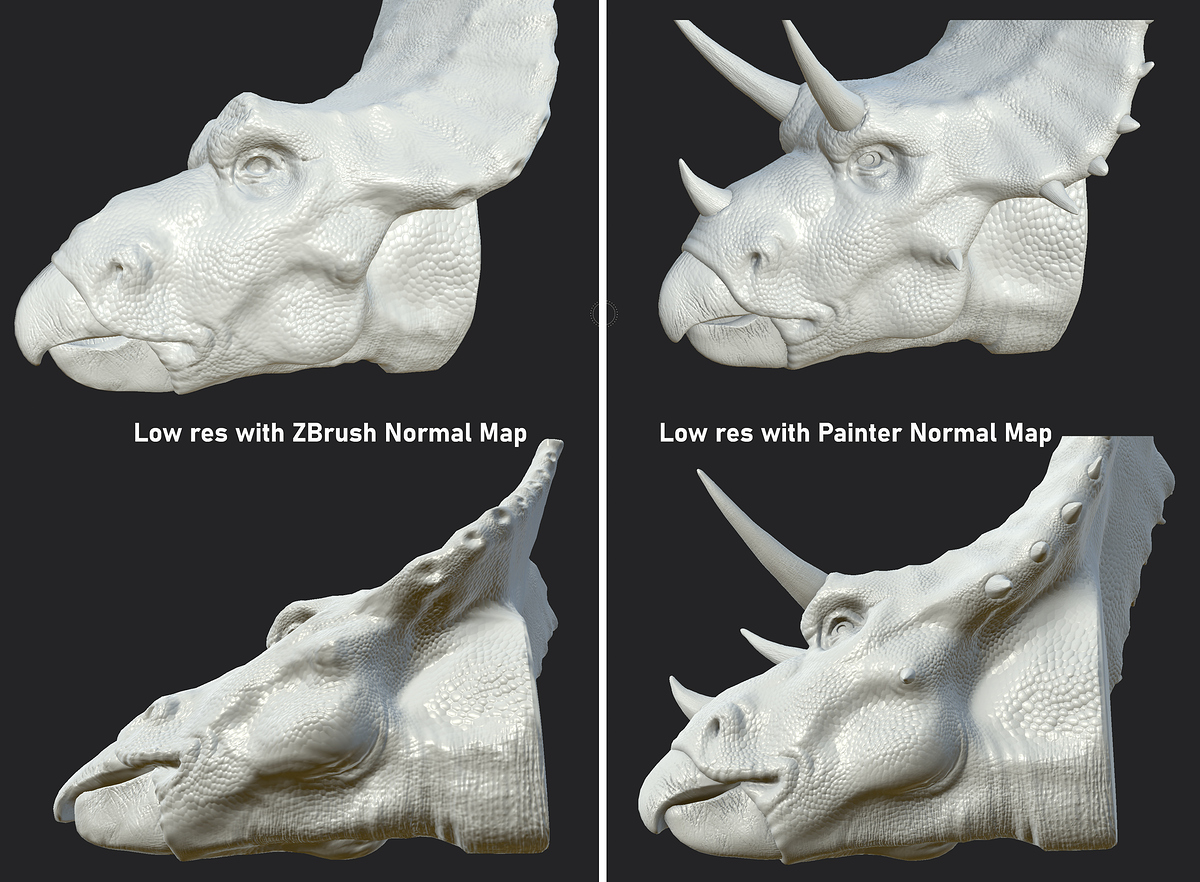

I have tested this workflow and everything works as expected except for one thing: I can’t get all the detail from my highest subdivision level into the normal map.

Nothing is actually missing; the normal map just seems slightly blurred and lower in resolution than the result I get with Substance Painter. It also looks lumpy in some areas.

I have tried 4k and 8k maps to no avail.

I have watched a few tutorials of people using Painter with normal maps generated in ZBrush. It seems like these people either don’t notice the slight loss of detail or don’t mind it. Or perhaps, for them, the convenience of the process is worth it?

Some people might think I’m being overly fussy, but I can’t justify switching my current workflow for one that saves time but achieves less detail.

Am I doing something wrong?

Is Substance Painter simply better at this than ZBrush?

If you have any experience with this I would love to hear your thoughts! Any and all comments are much appreciated!