That is not true in our experience. We did extensive testing back in 2004, when we started using Zbrush 2.

I’ve even exchanged some emails with the Pixologic staff, and it was their recommendation to use the morph target method.

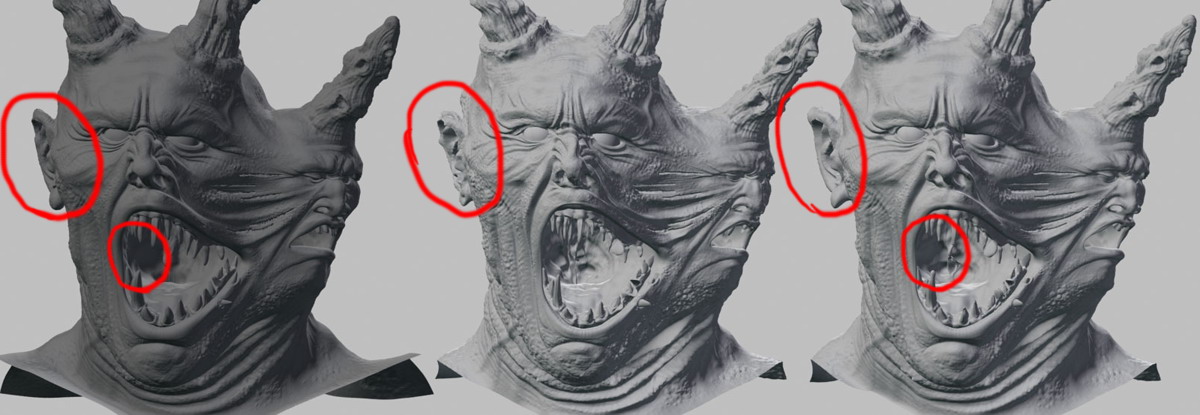

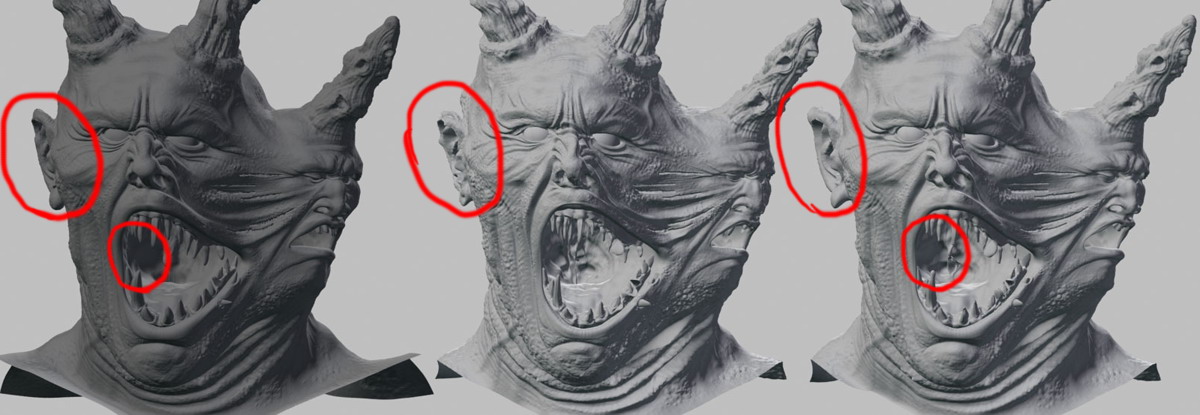

This is an image I made back then:

The left one is the geometry straight out of Zbrush. The other two use displacements generated from either the standard level 1 basemesh or the level 1 basemesh with the Cage button.

I think the artifacting is obvious. It was a relatively low-poly model, so the problems were more extreme. We’ve had a lot of problems with sword edges and tips too; just look at this guy’s teeth to see what we had going on with the weapons.

But it’s the same with anything else. Just try it with a simple plane: the border edges shrink in Zbrush but stay where they should in Maya.

Also, Mudbox can work in the standard way, without ruining the level 1 basemesh, so it’s clearly something specific to Zbrush and has nothing to do with how you subdivide in Maya.
